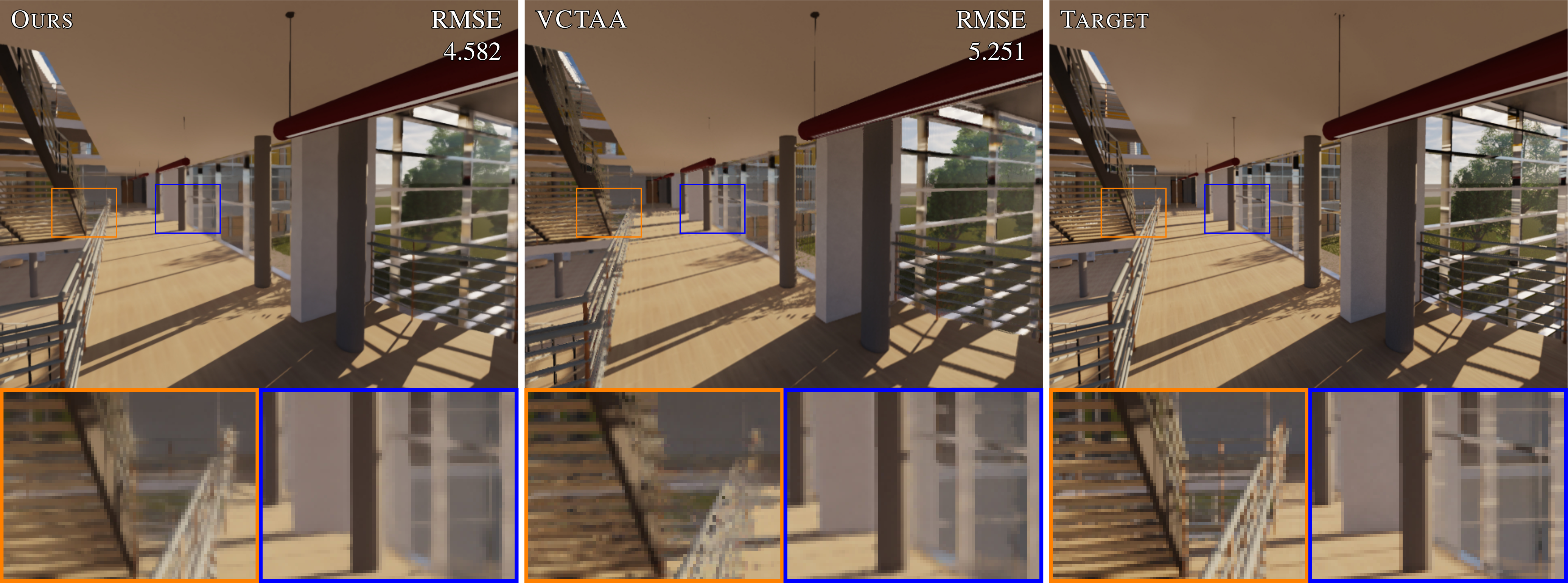

Comparison of temporal anti-aliasing (TAA) during fast camera movement with a 16× supersampled ground-truth image (right). Our small neural network (left) correctly resolves the railing and stairs that are distorted by variance clipping TAA (middle).

Abstract

Existing deep learning methods for temporal anti-aliasing (TAA) in rendering are either closed source or rely on upsampling networks with a large operation count, which makes them expensive to evaluate. We propose a simple deep learning architecture for TAA that combines only a few common primitives and is easy to assemble and to adapt to application needs. We use a fully convolutional neural network with recurrent temporal feedback, taking motion vectors and depth values as input, and show that such a simple network can produce satisfactory results. Our architecture template, for which we provide code, introduces a method that adapts to different temporal subpixel offsets for accumulation without increasing the operation count: convolutional layers cycle through a set of different weights, one set per temporal subpixel offset, while their operations remain fixed. We analyze the effect of this method on image quality and present different tradeoffs for adapting the architecture. We show that our simple network performs remarkably better than variance clipping TAA, eliminating both flickering and ghosting without performing upsampling.
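The recurrent temporal feedback relies on motion vectors to align the previous output with the current frame before the network sees it. The following is a minimal NumPy sketch of that data flow, not the authors' released code: `reproject_history` backward-warps the previous output with bilinear sampling, and `assemble_inputs` stacks color, depth, motion vectors and the warped history into one per-pixel input tensor. The function names, the channel layout and the motion-vector convention (in pixels, pointing from each current pixel to its position in the previous frame) are assumptions.

```python
import numpy as np

def reproject_history(history, motion):
    """Backward-warp the previous frame's output into the current frame.

    history: (H, W, C) previous network output.
    motion:  (H, W, 2) per-pixel (dx, dy) in pixels, assumed to point from the
             current pixel to where it was in the previous frame.
    Uses bilinear interpolation; sample positions are clamped to the image.
    """
    h, w, _ = history.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Sample position in the previous frame, clamped to valid pixel centers.
    sx = np.clip(xs + motion[..., 0], 0, w - 1)
    sy = np.clip(ys + motion[..., 1], 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx = (sx - x0)[..., None]
    fy = (sy - y0)[..., None]
    # Interpolate horizontally on the two rows, then vertically between them.
    top = history[y0, x0] * (1 - fx) + history[y0, x1] * fx
    bottom = history[y1, x0] * (1 - fx) + history[y1, x1] * fx
    return top * (1 - fy) + bottom * fy

def assemble_inputs(color, depth, motion, prev_output):
    """Concatenate per-pixel features (hypothetical layout) for a fully convolutional net."""
    warped = reproject_history(prev_output, motion)
    return np.concatenate([color, depth[..., None], motion, warped], axis=-1)
```

With zero motion the history passes through unchanged. Engines frequently store motion vectors in normalized screen coordinates, so they would need rescaling to pixels before a function like this is used.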
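The weight-cycling idea can be illustrated with a small hypothetical layer. In this sketch, `CyclingConv2d` stores one kernel per subpixel-offset phase and selects it with `frame_index % num_phases`, assuming the camera jitter sequence repeats with that period (for example, an 8-sample pattern). Each frame still performs exactly one convolution of the same shape, so the operation count stays fixed; only parameter memory grows with the number of phases. The class, its random initialization and the phase-selection rule are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

def conv2d_same(x, weight, bias):
    """'Same'-padded 2D convolution (cross-correlation, as in DL frameworks).

    x: (H, W, Cin), weight: (k, k, Cin, Cout) with odd k, bias: (Cout,)
    """
    k = weight.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))  # zero padding
    h, w, _ = x.shape
    out = np.zeros((h, w, weight.shape[-1]))
    for dy in range(k):
        for dx in range(k):
            # Add the contribution of one kernel tap for all pixels at once.
            out += xp[dy:dy + h, dx:dx + w] @ weight[dy, dx]
    return out + bias

class CyclingConv2d:
    """Hypothetical conv layer holding one weight set per jitter phase.

    Only the selected weight set is used per frame, so the per-frame cost
    equals that of a single ordinary convolution.
    """

    def __init__(self, num_phases, k, cin, cout, seed=0):
        rng = np.random.default_rng(seed)
        self.weights = rng.normal(0.0, 0.1, (num_phases, k, k, cin, cout))
        self.biases = np.zeros((num_phases, cout))

    def __call__(self, x, frame_index):
        # Assumes the jitter sequence repeats every num_phases frames, so the
        # frame index modulo that period identifies the subpixel offset.
        phase = frame_index % len(self.weights)
        return conv2d_same(x, self.weights[phase], self.biases[phase])
```

Frames 0 and 8 of an 8-phase layer share weights, while frames 0 and 1 use different kernels with identical cost.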
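For reference, the variance clipping baseline is commonly implemented by constraining the reprojected history to a box around the local color distribution of the current frame, [μ − γσ, μ + γσ] per channel over a 3×3 neighborhood, and then blending with an exponential moving average. The sketch below is a simplified per-channel clamp variant; the defaults γ = 1 and α = 0.1 are typical values assumed here, not settings taken from the paper.

```python
import numpy as np

def neighborhood_moments(color):
    """Per-pixel mean and standard deviation over a 3x3 window (edge-replicated)."""
    h, w, _ = color.shape
    p = np.pad(color, ((1, 1), (1, 1), (0, 0)), mode="edge")
    taps = np.stack([p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    mean = taps.mean(axis=0)
    # Guard against tiny negative variances caused by floating-point error.
    std = np.sqrt(np.maximum((taps ** 2).mean(axis=0) - mean ** 2, 0.0))
    return mean, std

def variance_clipping_taa(current, reprojected_history, gamma=1.0, alpha=0.1):
    """Clamp history to mean +/- gamma*std, then blend with the current frame."""
    mean, std = neighborhood_moments(current)
    clipped = np.clip(reprojected_history, mean - gamma * std, mean + gamma * std)
    return alpha * current + (1 - alpha) * clipped
```

Rejecting history outside the box limits ghosting, but on thin geometry such as the railing in the comparison figure it can also discard valid detail.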

This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0) enabled by Projekt DEAL.


BibTeX

@inproceedings {10.2312:hpg.20231134,
  booktitle = {High-Performance Graphics - Symposium Papers},
  editor = {Bikker, Jacco and Gribble, Christiaan},
  title = {{Minimal Convolutional Neural Networks for Temporal Anti Aliasing}},
  author = {Herveau, Killian and Piochowiak, Max and Dachsbacher, Carsten},
  year = {2023},
  publisher = {The Eurographics Association},
  issn = {2079-8687},
  isbn = {978-3-03868-229-5},
  doi = {10.2312/hpg.20231134}
}