Journal of Computer Graphics Techniques Vol. 9 (2), 2020

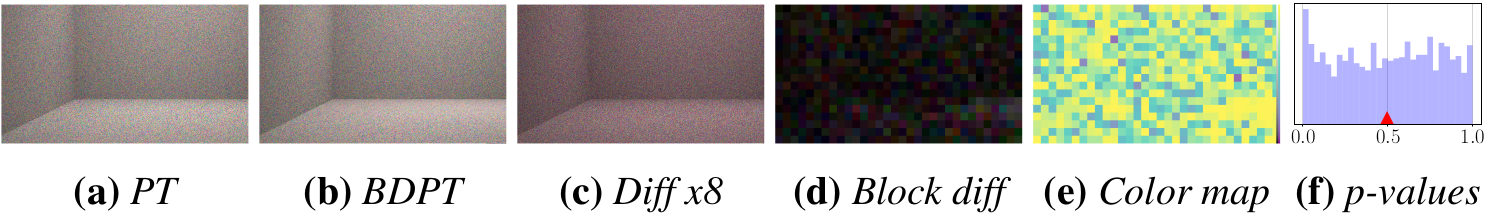

Welch’s t-test in an empty, gray box: (a) an unbiased forward path tracer reference implementation compared to (b) a biased bidirectional path tracer, each limited to paths with three vertices and rendered with 10 samples per pixel. The two center images show the difference (c) and the tile-wise difference (d), which reveal hardly any bias. Welch’s t-test outputs a color map (e) that reveals bias to the right, and a non-uniform histogram of p-values (f), which is strong evidence that (a) and (b) will not converge to the same image with more samples.
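The caption reads a non-uniform p-value histogram as evidence of bias. The reasoning is that if both renderers converge to the same image, each per-pixel p-value is (approximately) uniformly distributed on [0, 1], so the histogram should be roughly flat, whereas bias piles p-values up near 0. The sketch below bins p-values and, as an extra check that the source does not describe, computes a one-sample Kolmogorov-Smirnov statistic against the uniform distribution using the asymptotic 5% critical value 1.358/√n. All function names are hypothetical, and the KS check assumes independent p-values, which neighboring pixels may not strictly satisfy.

```python
import math

# Illustrative sketch, not the paper's code; function names are hypothetical.


def p_value_histogram(p_values, num_bins=10):
    """Count p-values in equal-width bins over [0, 1]."""
    counts = [0] * num_bins
    for p in p_values:
        # p == 1.0 would index one past the end, so clamp it into the last bin.
        counts[min(int(p * num_bins), num_bins - 1)] += 1
    return counts


def ks_uniform_statistic(p_values):
    """One-sample Kolmogorov-Smirnov distance between the p-values and U(0, 1)."""
    xs = sorted(p_values)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        # Compare the empirical CDF just after and just before x with F(x) = x.
        d = max(d, i / n - x, x - (i - 1) / n)
    return d


def looks_uniform(p_values, critical_coeff=1.358):
    """True if the KS test at roughly the 5% level does not reject uniformity.

    Assumption: independent p-values and large n (asymptotic critical value).
    """
    return ks_uniform_statistic(p_values) <= critical_coeff / math.sqrt(len(p_values))


# Hypothetical usage: p-values from an unbiased pair spread evenly over [0, 1],
# while a biased pair concentrates them near zero.
unbiased = [(i + 0.5) / 100 for i in range(100)]
biased = [0.0005 * (i + 1) for i in range(100)]
print(p_value_histogram(unbiased), looks_uniform(unbiased))
print(p_value_histogram(biased), looks_uniform(biased))
```

The histogram is the visual diagnostic; the KS statistic condenses the same question ("is this flat?") into one number that could, for example, gate an automated regression test.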

Abstract

When checking the implementation of a new renderer, one usually compares its output to that of a reference implementation. However, such tests require a large number of samples to be reliable, and sometimes they cannot reveal very subtle differences that are caused by bias but overshadowed by random noise. We propose using Welch’s t-test, a statistical test that reliably detects even small bias at low sample counts. Welch’s t-test is an established statistical method for determining whether two sample sets have the same underlying mean, based on their sample statistics. We adapt it to test whether two renderers converge to the same image, i.e., the same mean per pixel or pixel region. We also present two strategies for visualizing and analyzing the test’s results, which help to localize especially problematic image regions and to detect biased implementations with high confidence at low sample counts for both the reference and the tested implementation.
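As the abstract notes, Welch’s t-test compares two sample sets through their sample statistics alone. The sketch below is the generic textbook form of the test, not the paper’s implementation: given each renderer’s per-pixel mean, unbiased variance, and sample count, it computes the t statistic, the Welch-Satterthwaite degrees of freedom, and a two-sided p-value. To stay dependency-free it evaluates the Student’s t tail through the regularized incomplete beta function with a Numerical-Recipes-style continued fraction; with SciPy available, `scipy.stats.ttest_ind_from_stats(..., equal_var=False)` performs the same test (it takes standard deviations rather than variances). All function names here are hypothetical.

```python
import math

# Illustrative sketch of Welch's t-test on per-pixel statistics (not the
# paper's code). All function names are hypothetical.


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the regularized incomplete beta (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    # Use the continued fraction where it converges fastest.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def sample_stats(samples):
    """Sample mean, unbiased sample variance, and count of one pixel's samples."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean, var, n


def welch_t_test(mean1, var1, n1, mean2, var2, n2):
    """Return (t, degrees of freedom, two-sided p-value) of Welch's t-test."""
    se1, se2 = var1 / n1, var2 / n2
    se = se1 + se2
    if se == 0.0:
        # Both pixels are noise-free: the means either match exactly or not.
        if mean1 == mean2:
            return 0.0, math.inf, 1.0
        return math.copysign(math.inf, mean1 - mean2), math.inf, 0.0
    t = (mean1 - mean2) / math.sqrt(se)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = se * se / (se1 * se1 / (n1 - 1) + se2 * se2 / (n2 - 1))
    # Two-sided Student's t tail: P(|T| >= |t|) = I_{df/(df+t^2)}(df/2, 1/2).
    p = reg_inc_beta(0.5 * df, 0.5, df / (df + t * t))
    return t, df, p
```

For an image, one would call `welch_t_test` once per pixel with the statistics each renderer accumulated, then map the p-values to a color map or histogram. Note that the t-distribution is only an approximation when per-pixel sample means are far from normal, as can happen with heavy-tailed Monte Carlo estimates at very low sample counts.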

This paper was invited for a talk at I3D 2021.


BibTeX

@article{Jung2020Bias,
  author = {Alisa Jung and Johannes Hanika and Carsten Dachsbacher},
  title = {Detecting Bias in Monte Carlo Renderers using Welch’s t-test},
  year = 2020,
  month = {June},
  day = 13,
  journal = {Journal of Computer Graphics Techniques (JCGT)},
  volume = 9,
  number = 2,
  pages = {1--25},
  url = {http://jcgt.org/published/0009/02/01/},
  issn = {2331-7418}
}