In ACM Transactions on Graphics 38:4, Article 136 (issue for SIGGRAPH 2019)

Abstract

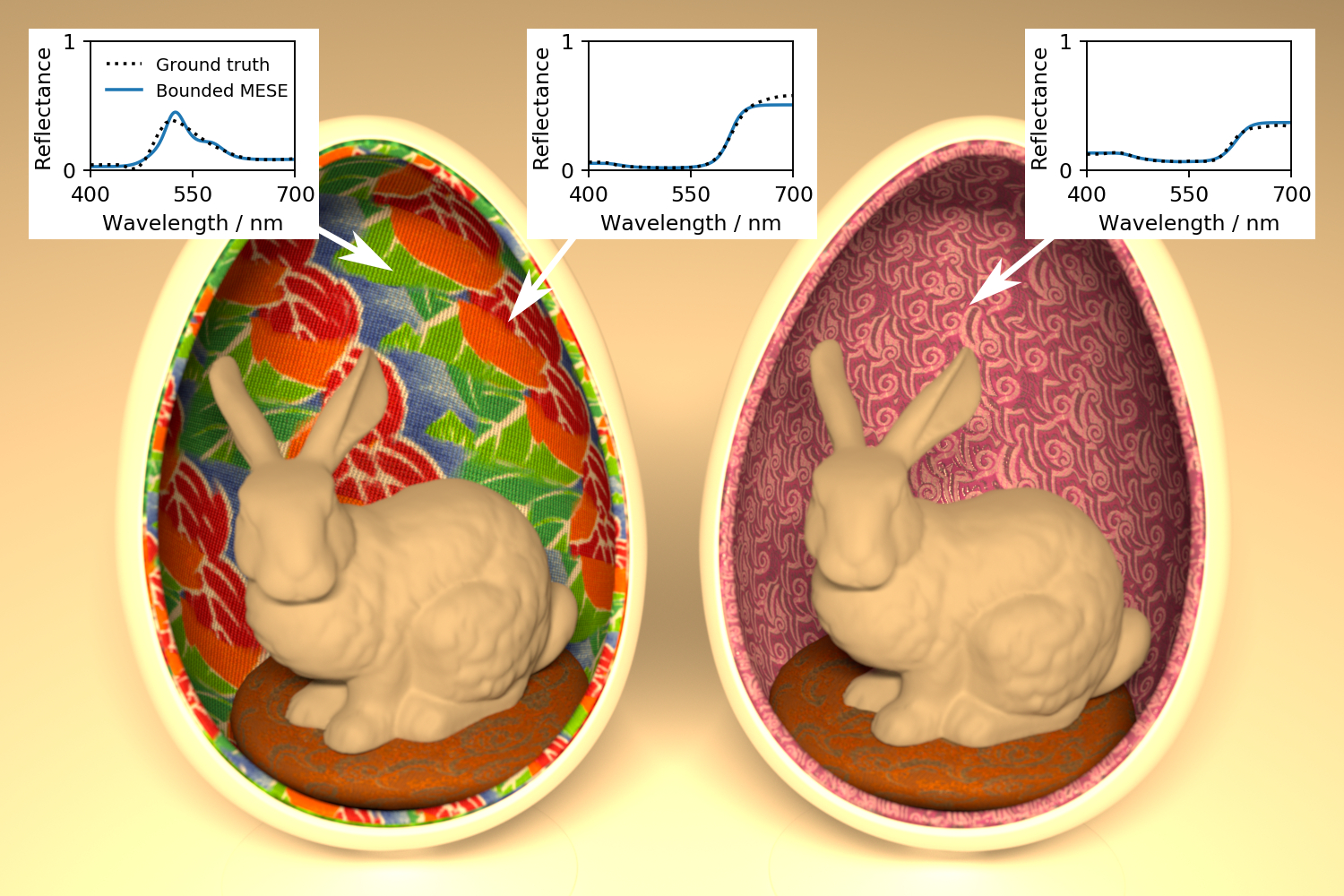

We present a compact and efficient representation of spectra for accurate rendering using more than three dimensions. While tristimulus color spaces are sufficient for color display, a spectral renderer has to simulate light transport per wavelength. Consequently, emission spectra and surface albedos need to be known at each wavelength. It is practical to store dense samples for emission spectra but for albedo textures, the memory requirements of this approach are unreasonable. Prior works that approximate dense spectra from tristimulus data introduce strong errors under illuminants with sharp peaks and in indirect illumination. We represent spectra by an arbitrary number of Fourier coefficients. However, we do not use a common truncated Fourier series because its ringing could lead to albedos below zero or above one. Instead, we present a novel approach for reconstruction of bounded densities based on the theory of moments. The core of our technique is our bounded maximum entropy spectral estimate. It uses an efficient closed form to compute a smooth signal between zero and one that matches the given Fourier coefficients exactly. Still, a ground truth that localizes all of its mass around a few wavelengths can be reconstructed adequately. Therefore, our representation covers the full gamut of valid reflectances. The resulting textures are compact because each coefficient can be stored in 10 bits. For compatibility with existing tristimulus assets, we implement a mapping from tristimulus color spaces to three Fourier coefficients. Using three coefficients, our technique gives state of the art results without some of the drawbacks of related work. With four to eight coefficients, our representation is superior to all existing representations. Our focus is on offline rendering but we also demonstrate that the technique is fast enough for real-time rendering.
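The ringing problem mentioned above can be illustrated with a minimal sketch. It is not the paper's method: it only shows how a plain truncated Fourier series leaves the interval from zero to one. The box-shaped albedo, the phase domain from -π to π, the choice of four real coefficients, and all function names are assumptions made for illustration.

```python
import math


def fourier_coefficients(signal, count, samples=2048):
    """Cosine coefficients c_0..c_{count-1} of an even, 2*pi-periodic signal.

    Uses a midpoint rule on [-pi, pi]; c_0 is the mean of the signal.
    """
    coeffs = []
    for k in range(count):
        total = 0.0
        for i in range(samples):
            phase = -math.pi + (i + 0.5) * 2.0 * math.pi / samples
            total += signal(phase) * math.cos(k * phase)
        coeffs.append(total / samples)
    return coeffs


def truncated_series(coeffs, phase):
    """Evaluate the truncated Fourier series c_0 + 2 * sum_k c_k cos(k * phase)."""
    value = coeffs[0]
    for k in range(1, len(coeffs)):
        value += 2.0 * coeffs[k] * math.cos(k * phase)
    return value


def box_albedo(phase):
    """Hypothetical reflectance: fully reflective on half of the domain."""
    return 1.0 if abs(phase) < 0.5 * math.pi else 0.0


# Four real coefficients (c_0 to c_3) of a valid albedo with values in [0, 1].
coeffs = fourier_coefficients(box_albedo, 4)

# Reconstruct on a regular grid and record the extreme values.
values = [truncated_series(coeffs, -math.pi + i * 2.0 * math.pi / 512)
          for i in range(513)]
lowest, highest = min(values), max(values)
print(f"min = {lowest:.4f}, max = {highest:.4f}")
```

In this setup the reconstruction overshoots and undershoots the valid range by about 0.1, even though the input albedo never leaves it. A bounded reconstruction such as the one proposed in the paper is meant to avoid exactly this.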

Notes

This technical paper will be presented at SIGGRAPH 2019 on 1 August 2019. The author’s version was published on 2 June 2019.

Downloads